Free Robots.txt Generator

Select your crawling preferences to generate a fully optimized robots.txt file.

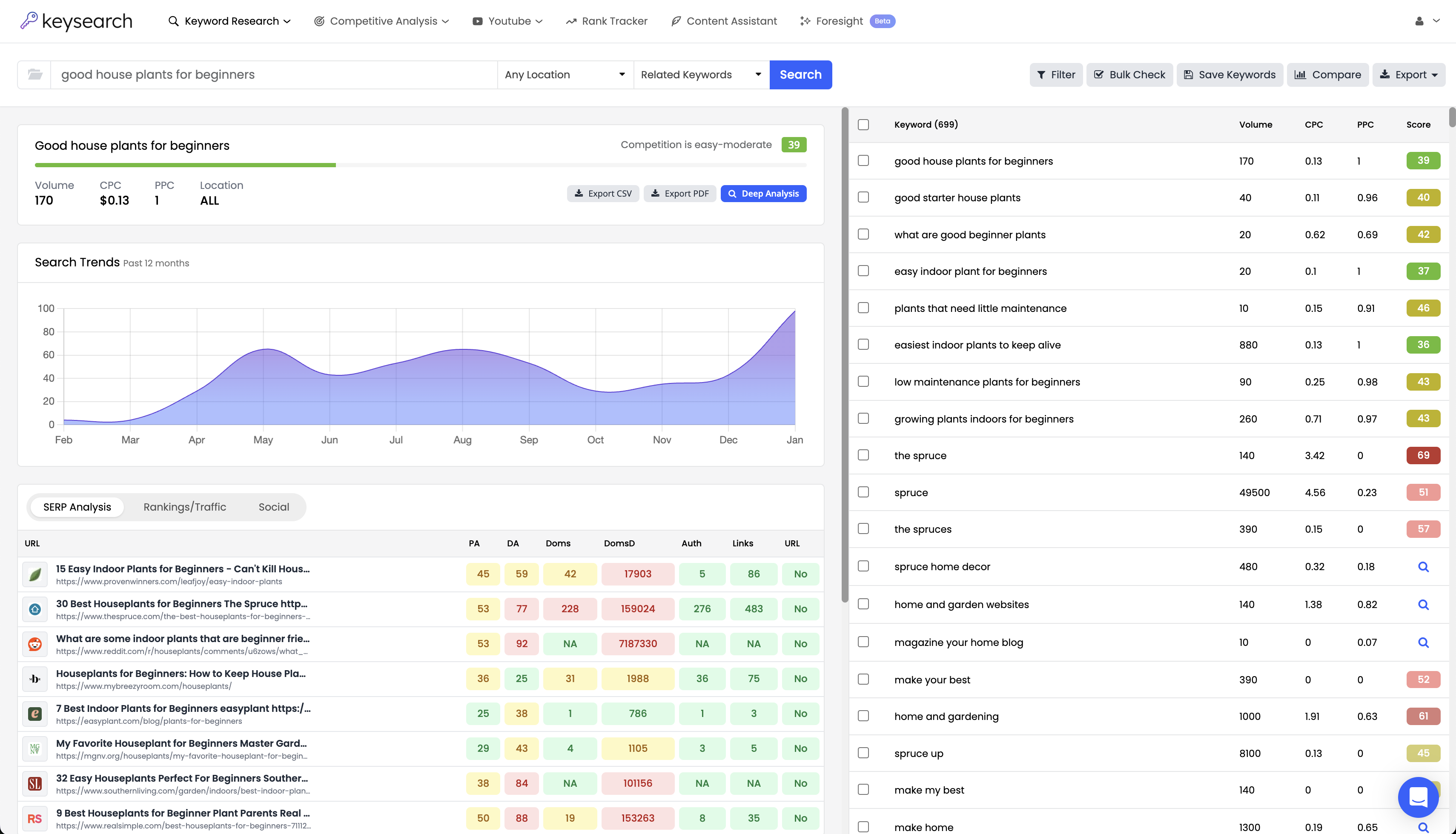


Why a Robots.txt File Generator is a Must For Any Website Owner or SEO

The more you know about how search engines work, the more you can tweak your website to your advantage and improve your SEO. One thing not many people know about is the robots.txt file. The name might sound confusing or technical, but you don't need to be an SEO expert to understand and use a robots.txt file.

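A robots.txt file is a plain text file in the root directory of your website that uses the Robots Exclusion Protocol (also called the robots exclusion standard) to allow or prevent Google and other search engines from:

- Crawling the entire website

- Crawling only certain pages or directories of a website

Note that robots.txt controls crawling, not indexing. A page that's blocked in robots.txt can still appear in search results, usually without a description, if other websites link to it.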

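Search engines check the instructions within the robots.txt file before they start crawling a website and its content. A robots.txt file is useful if you don't want crawlers spending time on certain parts of your website, like Thank You pages or pages with confidential or legal information. Keep in mind that the file itself is publicly readable and that crawlers follow it voluntarily, so truly confidential pages should also be protected with a login or password.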

To check whether your website already has a robots.txt file, go to your browser's address bar and add /robots.txt to your domain name. The URL should look like this:

http://www.yourdomainname.com/robots.txt
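If you'd rather script this check, here's a minimal Python sketch. It assumes you start from any absolute page URL on your site; the function name robots_url and the shop. subdomain in the example are hypothetical. It keeps the scheme and host and swaps everything else for /robots.txt, which is also why each subdomain ends up with its own file.

```python
from urllib.parse import urlsplit, urlunsplit


def robots_url(page_url: str) -> str:
    """Return the robots.txt URL for the host that serves page_url (hypothetical helper)."""
    parts = urlsplit(page_url)
    if not parts.scheme or not parts.netloc:
        raise ValueError(f"expected an absolute URL, got {page_url!r}")
    # Keep the scheme and host (netloc includes any subdomain and port);
    # robots.txt always sits at the root, so path, query and fragment are replaced.
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))


if __name__ == "__main__":
    print(robots_url("http://www.yourdomainname.com/blog/my-post?ref=home"))
    print(robots_url("https://shop.yourdomainname.com/cart"))
```

The first call prints http://www.yourdomainname.com/robots.txt and the second https://shop.yourdomainname.com/robots.txt. If opening that address returns a "404 Not Found" page, the site doesn't have a robots.txt file yet.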

You can also log into your hosting account, open its file manager, and check your site's root directory.

If your website already has a robots.txt file, there are some additions you can make to further help optimize your SEO. If you can't find a robots.txt file, you can create one – it's very easy with our free robots.txt file generator!

What Makes Our Solution the #1 Choice?

There are plenty of robots.txt generator tools online, many of them free. However, the problem with most other options is how complicated they make generating your file.

We've simplified it for you with this free robots.txt file generator. It's 100% free to use and you can get started without even creating an account. Just submit your requirements for the file: choose which crawlers you want to allow and which you don't. You don't even need to submit a sitemap if you don't want to!

You can join 10,000 individuals who rely on our free SEO tools to map out and execute their SEO strategies. We'll walk you through how to use our free robots.txt generator below.

How to Use Our Free Robots.txt Generator

Our robots.txt file generator quickly creates robots.txt files for your website. You can either open and edit an existing file or create a new one using the output of our generator.

Depending on your preferences, you have the option to easily pick which types of crawlers (Google, Yahoo, Baidu, Alexa, etc.) to allow or disallow. Likewise, you can add other directives like crawl delay with only a few clicks, instead of typing everything from scratch.

Compare that to the traditional approach of creating a robots.txt file. You'd have to open Notepad on Windows or TextEdit on Mac (in plain-text mode) to create a blank TXT file, name it "robots.txt", and then type in the instructions you want by hand.

If you want all robots to access everything on your website, then your robots.txt file should look like this:

User-agent: *
Disallow:

Basically, the robots.txt file here disallows nothing, or in other words, is allowing everything to be crawled. The asterisk next to "User-agent" means that the instruction below applies to all types of robots.

On the other hand, if you don't want robots to access anything, simply add the forward slash symbol like this:

User-agent: *
Disallow: /

Note that a single character can completely change what an instruction does, so be careful when editing your robots.txt file.
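To see how much that single slash matters, here's a minimal sketch that uses Python's standard-library urllib.robotparser module to evaluate both files against the same page. The helper name can_crawl, the crawler name AnyBot, and the example.com URL are placeholders for illustration.

```python
from urllib.robotparser import RobotFileParser

ALLOW_ALL = "User-agent: *\nDisallow:\n"
BLOCK_ALL = "User-agent: *\nDisallow: /\n"


def can_crawl(robots_txt: str, user_agent: str, url: str) -> bool:
    """Parse robots.txt text and report whether user_agent may fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    page = "https://www.example.com/blog/post"
    print("Disallow:   ->", can_crawl(ALLOW_ALL, "AnyBot", page))  # True: nothing blocked
    print("Disallow: / ->", can_crawl(BLOCK_ALL, "AnyBot", page))  # False: everything blocked
```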

In case you want to block a specific Google crawler, like Googlebot-Image, which crawls images, you can write this:

User-agent: Googlebot-Image
Disallow: /
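Here's a quick check, again a sketch using Python's urllib.robotparser, that this rule only affects the image crawler. The example URLs are placeholders. Because the file has no User-agent: * group, any crawler other than Googlebot-Image is left unrestricted.

```python
from urllib.robotparser import RobotFileParser

ROBOTS_TXT = """\
User-agent: Googlebot-Image
Disallow: /
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# The image crawler matches the group above, so every URL is off-limits to it.
image_bot_ok = parser.can_fetch("Googlebot-Image", "https://www.example.com/images/logo.png")
# Googlebot's main crawler matches no group (and there is no "*" group), so it is unrestricted.
googlebot_ok = parser.can_fetch("Googlebot", "https://www.example.com/about")

if __name__ == "__main__":
    print(image_bot_ok, googlebot_ok)  # False True
```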

Or if you want to block access to a certain type of file, like PDFs, write this:

User-agent: *
Allow: /
# Disallowed file types
Disallow: /*.pdf$

Keep in mind that paths are case-sensitive, so this rule won't match files ending in .PDF.
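Python's built-in urllib.robotparser only matches plain path prefixes, so it won't evaluate the * and $ in this rule. The sketch below is a hypothetical helper (pattern_to_regex and rule_matches are our own names, not a library API) that translates a rule into a regular expression the way Google documents wildcard matching: * matches any run of characters, a trailing $ anchors the end of the URL, and everything else must match literally, starting from the beginning of the path (including any query string).

```python
import re


def pattern_to_regex(pattern: str) -> "re.Pattern[str]":
    """Compile a robots.txt rule path such as '/*.pdf$' (hypothetical helper).

    '*' matches any run of characters, a trailing '$' anchors the end of the URL,
    and every other character is matched literally.
    """
    anchored = pattern.endswith("$")
    if anchored:
        pattern = pattern[:-1]
    # Escape the literal pieces and join them with '.*' wherever a '*' appeared.
    body = ".*".join(re.escape(piece) for piece in pattern.split("*"))
    # \Z matches only at the very end of the string.
    return re.compile(body + (r"\Z" if anchored else ""))


def rule_matches(pattern: str, path_and_query: str) -> bool:
    """True if the rule applies; re.match anchors at the start, so rules act as prefixes."""
    return pattern_to_regex(pattern).match(path_and_query) is not None


if __name__ == "__main__":
    for path in ["/files/report.pdf", "/files/report.pdf?download=1", "/files/REPORT.PDF"]:
        print(f"{path:30} blocked={rule_matches('/*.pdf$', path)}")
```

Only the first path is blocked: in the second, the query string comes after .pdf, so the $ anchor fails, and the third uses uppercase letters that the lowercase rule doesn't match.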

If you want to block access to a directory within your website, for example, the admin directory, write this:

User-agent: *
Disallow: /admin/

If you want to block a specific page, type its path (the part of the URL after your domain name):

User-agent: *
Disallow: /page-url
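Both rules above are prefix matches: a crawler compares the start of each URL path against the rule. This sketch uses Python's urllib.robotparser to show what that means in practice; the paths and the AnyBot name are made up for illustration.

```python
from urllib.robotparser import RobotFileParser

ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Disallow: /page-url
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())


def allowed(path: str) -> bool:
    """Ask the parsed rules whether a generic crawler may fetch this path."""
    return parser.can_fetch("AnyBot", "https://www.example.com" + path)


if __name__ == "__main__":
    for path in ["/admin", "/admin/users", "/administrator",
                 "/page-url", "/page-url-2024", "/blog/page-url"]:
        print(f"{path:16} allowed={allowed(path)}")
```

Notice that /page-url also blocks /page-url-2024, while /admin/ doesn't block /admin itself or /administrator. If you need to block exactly one page, crawlers that support the $ wildcard, including Google, accept a rule like Disallow: /page-url$.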

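And if you don't want Google to index a page, don't use robots.txt for it: Google stopped supporting the Noindex directive in robots.txt in 2019. Instead, add a robots meta tag to the page's <head> section:

<meta name="robots" content="noindex">

For non-HTML files such as PDFs, you can send the same instruction in an X-Robots-Tag: noindex HTTP header. Don't also block the page in robots.txt, or Google won't be able to crawl it and see the noindex instruction.

If you're not sure what indexing means, it's the process by which a search engine stores a page so that it can appear in search results.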
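If you want to confirm that a page actually carries a noindex instruction, here's a rough Python sketch using only the standard library's html.parser. It assumes you already have the page's HTML and response headers in hand (fetching them is left out), and the names RobotsMetaFinder and has_noindex are hypothetical. It only looks at the generic robots meta tag and plain X-Robots-Tag values, not crawler-specific variants such as <meta name="googlebot">.

```python
from __future__ import annotations

from html.parser import HTMLParser


class RobotsMetaFinder(HTMLParser):
    """Collects directives from <meta name="robots" content="..."> tags."""

    def __init__(self) -> None:
        super().__init__()
        self.directives: list[str] = []

    def handle_starttag(self, tag, attrs):
        # HTMLParser lowercases tag and attribute names; attribute values may be None.
        if tag != "meta":
            return
        values = {name: (value or "") for name, value in attrs}
        if values.get("name", "").lower() == "robots":
            self.directives += [d.strip().lower() for d in values.get("content", "").split(",")]


def has_noindex(html: str, headers: dict[str, str]) -> bool:
    """True if the page opts out of indexing via a robots meta tag or X-Robots-Tag header."""
    finder = RobotsMetaFinder()
    finder.feed(html)
    # HTTP header names are case-insensitive, so compare them in lowercase.
    header = next((v for k, v in headers.items() if k.lower() == "x-robots-tag"), "")
    header_directives = [d.strip().lower() for d in header.split(",")]
    return "noindex" in finder.directives or "noindex" in header_directives


if __name__ == "__main__":
    page = '<html><head><meta name="robots" content="noindex, follow"></head></html>'
    print(has_noindex(page, {}))  # True
```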

Lastly, for big websites that are frequently updated with new content, it's possible to set up a delay timer to prevent servers from being overloaded with crawlers coming to check for new content. In a case like this, you could add the following directive:

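User-agent: *
Crawl-delay: 120

Crawlers that support this directive should then wait about 120 seconds between requests, which keeps them from hitting your server too often. Googlebot ignores Crawl-delay entirely, and because the directive isn't part of the official standard, other crawlers may interpret the value in slightly different ways.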
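Here's a small Python sketch that reads the delay with the standard library's urllib.robotparser (its crawl_delay method) and works out what 120 seconds means in practice. It assumes a crawler that waits the full delay between every request; AnyBot and max_requests_per_day are placeholder names.

```python
from urllib.robotparser import RobotFileParser

ROBOTS_TXT = """\
User-agent: *
Crawl-delay: 120
"""

SECONDS_PER_DAY = 24 * 60 * 60  # 86,400


def max_requests_per_day(delay_seconds: int) -> int:
    """Most requests a crawler can make in a day if it waits delay_seconds between them."""
    return SECONDS_PER_DAY // delay_seconds


parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())
delay = parser.crawl_delay("AnyBot")  # None if no matching group sets a delay

if __name__ == "__main__":
    print(delay)                        # 120
    print(max_requests_per_day(delay))  # 720
```

At one request every 120 seconds, a crawler that honors the delay can fetch at most 86,400 ÷ 120 = 720 URLs per day, so a large value can noticeably slow down how quickly new content gets discovered.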

There are other kinds of directives you can add, but these are the most important to know.

When you're done with the instructions, upload the robots.txt file to the root directory of your website using an FTP client like FileZilla or your hosting provider's file manager. If you have subdomains, create a separate robots.txt file for each one, because a robots.txt file only applies to the host it's served from.

Don't work harder when you could work smarter with our robots.txt file generator. Get started at Keysearch today and optimize your website for better rankings! If you still have any questions about using our robots.txt generator, get in touch with our customer service team.

FAQs for Our Free Robots TXT Generator

Why is a Robots.txt File Important?

A robots.txt file controls how search engines crawl your website. It allows you to specify which parts of your site should be accessible to crawlers and which should be restricted.

Without one, crawlers will assume they can access every part of your website. On busy sites, heavy crawling by third-party bots can slow load times and sometimes cause server errors. Loading speed affects the experience of website visitors, many of whom will leave your site if it doesn't load quickly. There are privacy reasons to disallow certain crawlers, too, although only well-behaved crawlers follow robots.txt instructions.

What's the Difference Between Robots.txt and Sitemap?

A robots.txt file tells search engine crawlers which pages or directories they may or may not crawl, while a sitemap is a file that lists the pages on your website you want search engines to find, helping them discover and index your content more efficiently.

In essence, the robots.txt file tells search engines where not to go, while the sitemap tells them where they should go. Both are important, but they're very different.

What is Syntax?

Syntax refers to the specific format and rules that must be followed when writing a robots.txt file. This includes using the correct commands, such as User-agent, Disallow, Allow, and Crawl-delay.

Proper syntax also ensures your instructions are written in a way that search engine crawlers can understand. Incorrect syntax, such as a misspelled directive or a missing colon, can cause crawlers to misread or ignore your instructions.
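A few of these mistakes can be caught automatically. The sketch below is a hypothetical checker written in Python (lint_robots_txt is our own name, not a standard tool). It only knows the directives covered in this article and flags lines without a colon, unrecognized directive names such as typos, and rules that appear before any User-agent line. Google, for example, simply ignores lines it can't parse, which is exactly why these mistakes are easy to miss.

```python
from __future__ import annotations

# Directives this sketch treats as valid: the ones covered in this article.
KNOWN_FIELDS = {"user-agent", "allow", "disallow", "crawl-delay", "sitemap"}
GROUP_RULES = {"allow", "disallow", "crawl-delay"}


def lint_robots_txt(text: str) -> list[str]:
    """Return warnings for common robots.txt syntax mistakes (hypothetical checker)."""
    warnings = []
    seen_user_agent = False
    for number, raw in enumerate(text.splitlines(), start=1):
        line = raw.split("#", 1)[0].strip()  # drop comments and surrounding whitespace
        if not line:
            continue
        if ":" not in line:
            warnings.append(f"line {number}: missing ':' in {line!r}")
            continue
        field = line.split(":", 1)[0].strip().lower()
        if field not in KNOWN_FIELDS:
            warnings.append(f"line {number}: unrecognized directive {field!r}")
        elif field in GROUP_RULES and not seen_user_agent:
            warnings.append(f"line {number}: {field!r} appears before any User-agent line")
        elif field == "user-agent":
            seen_user_agent = True
    return warnings


SAMPLE = """\
Disallow: /tmp/
User-agent *
User-agent: *
Dissallow: /private/
"""

if __name__ == "__main__":
    for warning in lint_robots_txt(SAMPLE):
        print(warning)
```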

How Do I Disallow?

To prevent search engine crawlers from accessing specific pages or directories, you use the Disallow directive in your robots.txt file. For example, if you want to block all crawlers from accessing a directory named "private," you would add the following line to your file:

User-agent: *
Disallow: /private/

This tells all user-agents (crawlers) not to crawl the "private" directory. It's as easy as a few clicks with our robots TXT generator.

How Do I Add My Sitemap to the Robots.txt File?

To help search engines discover your sitemap, you can add a Sitemap directive in your robots.txt file. This is done by simply adding a line that points to the URL of your sitemap. For example:

Sitemap: http://www.yourdomainname.com/sitemap.xml

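The Sitemap line isn't tied to any User-agent group, so it can go anywhere in the file, though many site owners put it at the end for readability. Use the full URL, including http:// or https://.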
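Python's standard-library urllib.robotparser (Python 3.8 and later) reads Sitemap lines through its site_maps method, which makes it easy to confirm the line is picked up even when it isn't at the end of the file. This is a minimal sketch; the file contents are only an example.

```python
from urllib.robotparser import RobotFileParser

# The Sitemap line is deliberately placed first, before any User-agent group.
ROBOTS_TXT = """\
Sitemap: http://www.yourdomainname.com/sitemap.xml

User-agent: *
Disallow: /private/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

if __name__ == "__main__":
    print(parser.site_maps())  # ['http://www.yourdomainname.com/sitemap.xml']
    # The group rules are still read normally.
    print(parser.can_fetch("AnyBot", "http://www.yourdomainname.com/private/page"))  # False
```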

How Do I Submit the Robots.txt File to Google?

Google finds your robots.txt file automatically as long as it sits in the root directory of your site, so there's no separate submission step. Once you've created or updated the file with our free robots.txt generator, log into Google Search Console and open the robots.txt report, found under Settings.

The report shows which robots.txt files Google found for your site, when they were last crawled, and any warnings or errors. If you've just changed the file, you can request a recrawl from the report so Google picks up your new instructions sooner.

What is a User-Agent and How Do I Define it?

A User-agent in a robots.txt file specifies which search engine crawlers (bots) the rules apply to. Each search engine has its own user-agent, such as Googlebot for Google and Bingbot for Bing. To define a user-agent in your robots.txt file, you start with the User-agent directive followed by the name of the crawler. For example:

User-agent: Googlebot

You can also use an asterisk (*) to apply the rules to all user-agents:

User-agent: *
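When a file has both a named group and a * group, a crawler follows only the group that matches its name and ignores the * group entirely; the groups don't combine. This sketch uses Python's urllib.robotparser to show the effect; the paths and example.com URLs are placeholders.

```python
from urllib.robotparser import RobotFileParser

ROBOTS_TXT = """\
User-agent: Googlebot
Disallow: /drafts/

User-agent: *
Disallow: /drafts/
Disallow: /search/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

SEARCH_PAGE = "https://www.example.com/search/results"

# Googlebot has its own group, so only /drafts/ is off-limits to it.
googlebot_ok = parser.can_fetch("Googlebot", SEARCH_PAGE)
# Bingbot has no group of its own, so it falls back to the "*" group.
bingbot_ok = parser.can_fetch("Bingbot", SEARCH_PAGE)

if __name__ == "__main__":
    print(googlebot_ok, bingbot_ok)  # True False
```

That's why the Googlebot group repeats Disallow: /drafts/: once Googlebot has a group of its own, rules in the * group no longer apply to it.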

What is the Allow Directive and How Do I Use it?

The Allow directive in a robots.txt file is used to grant specific permission for a crawler to access a particular page or directory, even if broader disallow rules are in place. For instance, if you've disallowed a whole directory but want to allow access to a specific file within it, you would write:

User-agent: *
Disallow: /private/
Allow: /private/special-file.html

This setup disallows the entire "private" directory except for the "special-file.html."
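Google resolves conflicts like this with the most specific rule: the matching rule with the longest path wins, and if an Allow and a Disallow rule are equally long, the less restrictive Allow wins. Python's urllib.robotparser checks rules in file order instead (the first match wins), so it would report special-file.html as blocked. The sketch below is a hypothetical is_allowed helper that applies longest-match with plain prefixes only; it ignores * and $ wildcards to stay short.

```python
from __future__ import annotations


def is_allowed(rules: list[tuple[str, str]], path: str) -> bool:
    """Longest-match decision for robots.txt rules (hypothetical helper, prefixes only).

    rules holds (directive, path) pairs such as ("disallow", "/private/").
    The matching rule with the longest path wins; on a tie, Allow wins.
    If no rule matches, the path is allowed.
    """
    best_length = -1
    allowed = True
    for directive, rule_path in rules:
        # An empty value (e.g. "Disallow:") matches nothing, like a non-matching prefix.
        if not rule_path or not path.startswith(rule_path):
            continue
        is_allow = directive.lower() == "allow"
        if len(rule_path) > best_length or (len(rule_path) == best_length and is_allow):
            best_length = len(rule_path)
            allowed = is_allow
    return allowed


RULES = [
    ("disallow", "/private/"),
    ("allow", "/private/special-file.html"),
]

if __name__ == "__main__":
    for path in ["/private/special-file.html", "/private/notes.html", "/blog/"]:
        print(f"{path:28} allowed={is_allowed(RULES, path)}")
```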

Are There Any Other SEO Tools Available at Keysearch?

As a matter of fact, you gain access to a comprehensive suite of SEO tools when you sign up with Keysearch. You'll have everything you need to research keyword opportunities and execute your strategy with precision. Here are our most popular offerings:

- Niche finder

- Keyword density checker

- Keyword difficulty checker

- LSI keyword generator

- SERP simulator

- Long tail keyword generator

- Duplicate content checker

- Keyword clustering tool

But if you're trying to rank products or content on a specific search engine that isn't Google, you can also use one of our more specialized tools to find the top keywords. We have a YouTube keyword generator, Pinterest keyword tool, Amazon keyword tool, Etsy keyword tool, and more.

Whether you're running a blog or setting up an e-commerce store, use our solutions to guide your content creation efforts and set yourself up to dominate the rankings in your niche!